Unknown Facts About Deepseek Made Known

페이지 정보

본문

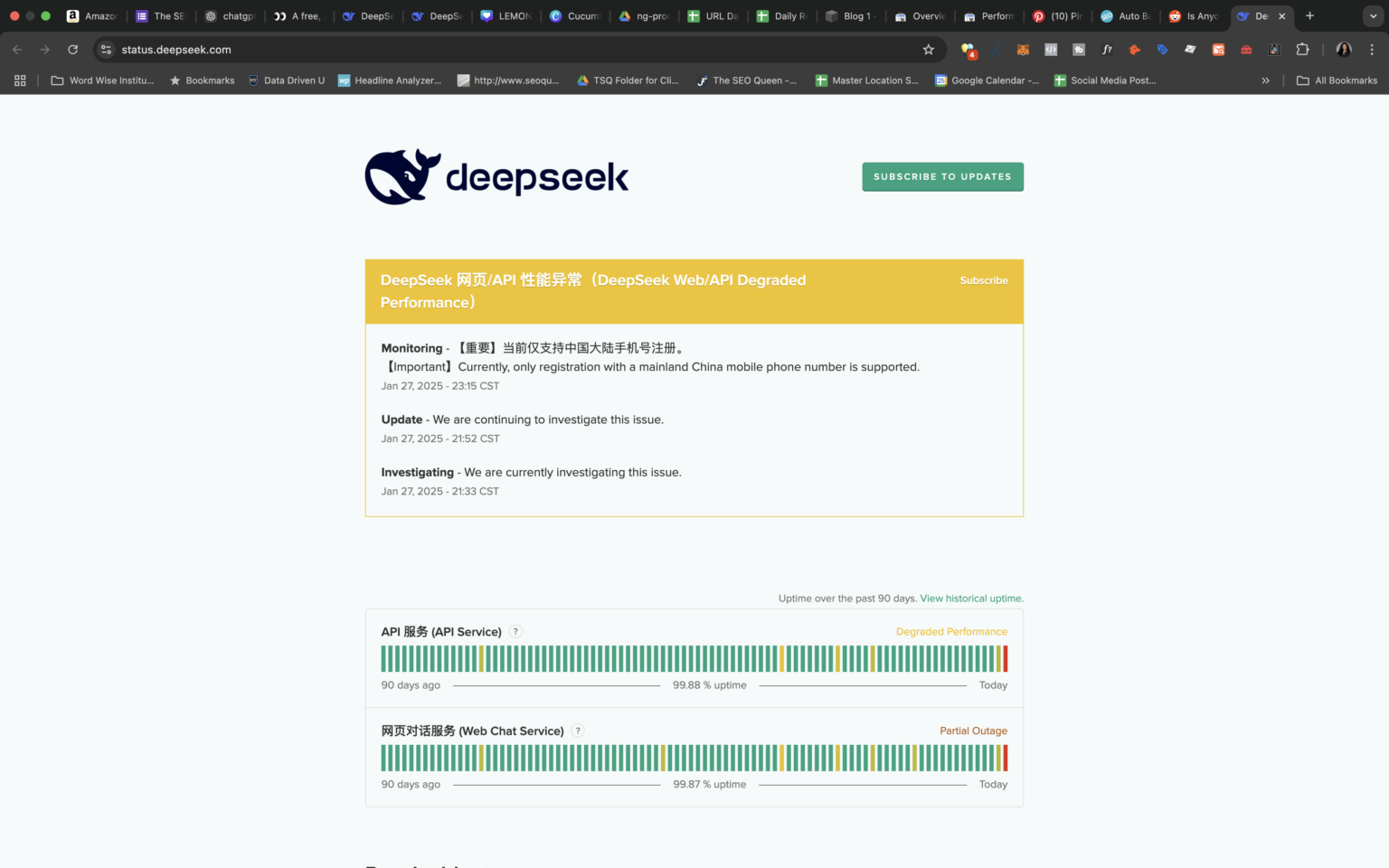

Anyone managed to get DeepSeek API working? The open supply generative AI movement might be troublesome to stay atop of - even for these working in or protecting the sphere similar to us journalists at VenturBeat. Among open fashions, we've seen CommandR, DBRX, Phi-3, Yi-1.5, Qwen2, DeepSeek v2, Mistral (NeMo, Large), Gemma 2, Llama 3, Nemotron-4. I hope that further distillation will happen and we are going to get nice and succesful fashions, excellent instruction follower in vary 1-8B. To this point fashions below 8B are manner too fundamental compared to larger ones. Yet positive tuning has too excessive entry level in comparison with simple API entry and immediate engineering. I don't pretend to understand the complexities of the fashions and the relationships they're skilled to type, but the truth that powerful fashions will be trained for an affordable quantity (compared to OpenAI raising 6.6 billion dollars to do a few of the identical work) is fascinating.

Anyone managed to get DeepSeek API working? The open supply generative AI movement might be troublesome to stay atop of - even for these working in or protecting the sphere similar to us journalists at VenturBeat. Among open fashions, we've seen CommandR, DBRX, Phi-3, Yi-1.5, Qwen2, DeepSeek v2, Mistral (NeMo, Large), Gemma 2, Llama 3, Nemotron-4. I hope that further distillation will happen and we are going to get nice and succesful fashions, excellent instruction follower in vary 1-8B. To this point fashions below 8B are manner too fundamental compared to larger ones. Yet positive tuning has too excessive entry level in comparison with simple API entry and immediate engineering. I don't pretend to understand the complexities of the fashions and the relationships they're skilled to type, but the truth that powerful fashions will be trained for an affordable quantity (compared to OpenAI raising 6.6 billion dollars to do a few of the identical work) is fascinating.

There’s a good amount of dialogue. Run DeepSeek-R1 Locally without cost in Just 3 Minutes! It pressured DeepSeek’s domestic competition, including ByteDance and Alibaba, to cut the usage costs for some of their fashions, and make others fully free deepseek. If you would like to track whoever has 5,000 GPUs on your cloud so you will have a way of who is succesful of coaching frontier fashions, that’s relatively easy to do. The promise and edge of LLMs is the pre-educated state - no need to gather and label data, spend money and time training own specialised models - just prompt the LLM. It’s to even have very massive manufacturing in NAND or not as cutting edge production. I very much might determine it out myself if needed, but it’s a transparent time saver to right away get a appropriately formatted CLI invocation. I’m attempting to determine the correct incantation to get it to work with Discourse. There will probably be payments to pay and proper now it doesn't appear like it's going to be corporations. Every time I read a put up about a new mannequin there was a press release comparing evals to and difficult fashions from OpenAI.

There’s a good amount of dialogue. Run DeepSeek-R1 Locally without cost in Just 3 Minutes! It pressured DeepSeek’s domestic competition, including ByteDance and Alibaba, to cut the usage costs for some of their fashions, and make others fully free deepseek. If you would like to track whoever has 5,000 GPUs on your cloud so you will have a way of who is succesful of coaching frontier fashions, that’s relatively easy to do. The promise and edge of LLMs is the pre-educated state - no need to gather and label data, spend money and time training own specialised models - just prompt the LLM. It’s to even have very massive manufacturing in NAND or not as cutting edge production. I very much might determine it out myself if needed, but it’s a transparent time saver to right away get a appropriately formatted CLI invocation. I’m attempting to determine the correct incantation to get it to work with Discourse. There will probably be payments to pay and proper now it doesn't appear like it's going to be corporations. Every time I read a put up about a new mannequin there was a press release comparing evals to and difficult fashions from OpenAI.

The mannequin was skilled on 2,788,000 H800 GPU hours at an estimated price of $5,576,000. KoboldCpp, a fully featured net UI, with GPU accel across all platforms and GPU architectures. Llama 3.1 405B trained 30,840,000 GPU hours-11x that utilized by DeepSeek v3, for a mannequin that benchmarks slightly worse. Notice how 7-9B fashions come close to or surpass the scores of GPT-3.5 - the King mannequin behind the ChatGPT revolution. I'm a skeptic, especially because of the copyright and environmental points that come with creating and running these providers at scale. A welcome results of the elevated effectivity of the models-both the hosted ones and the ones I can run regionally-is that the vitality usage and environmental influence of running a immediate has dropped enormously over the past couple of years. Depending on how much VRAM you have got on your machine, you might be capable to benefit from Ollama’s ability to run multiple fashions and handle a number of concurrent requests by utilizing DeepSeek Coder 6.7B for autocomplete and Llama three 8B for chat.

We release the deepseek ai LLM 7B/67B, together with each base and chat models, to the general public. Since launch, we’ve additionally gotten affirmation of the ChatBotArena rating that locations them in the top 10 and over the likes of latest Gemini pro models, Grok 2, o1-mini, and so forth. With only 37B energetic parameters, this is extremely appealing for a lot of enterprise functions. I'm not going to start using an LLM day by day, but reading Simon over the past year helps me think critically. Alessio Fanelli: Yeah. And I believe the opposite big factor about open source is retaining momentum. I feel the last paragraph is the place I'm still sticking. The subject started as a result of someone requested whether he still codes - now that he is a founding father of such a big company. Here’s everything it's worthwhile to find out about Deepseek’s V3 and R1 fashions and why the company may essentially upend America’s AI ambitions. Models converge to the identical levels of performance judging by their evals. All of that suggests that the models' performance has hit some pure restrict. The know-how of LLMs has hit the ceiling with no clear answer as to whether the $600B funding will ever have reasonable returns. Censorship regulation and implementation in China’s leading models have been efficient in limiting the vary of attainable outputs of the LLMs with out suffocating their capability to reply open-ended questions.

For more on deep seek look at our own page.

- 이전글9 Things Your Parents Teach You About Darling Hahns Macaw 25.02.01

- 다음글10 Tilt And Turn Timber Window Mechanism Tricks Experts Recommend 25.02.01

댓글목록

등록된 댓글이 없습니다.