What You Must Know About DeepSeek and Why

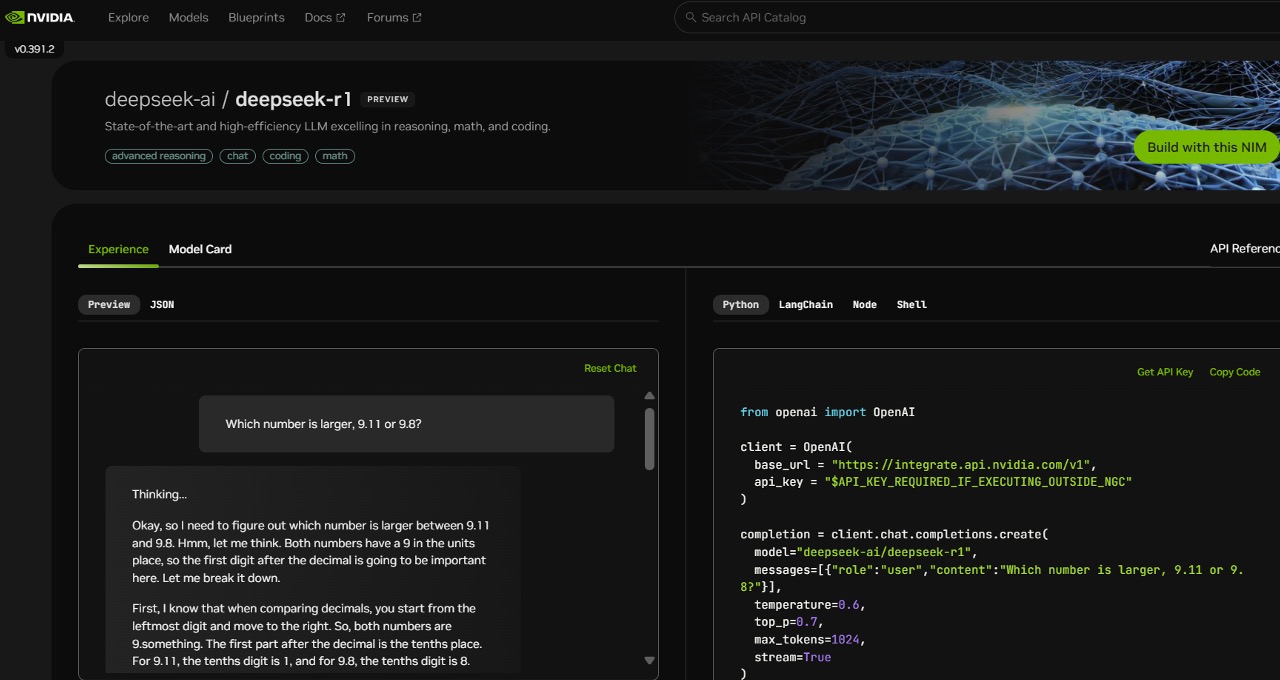

Now to another DeepSeek giant, DeepSeek-Coder-V2! Training data: Compared with the original DeepSeek-Coder, DeepSeek-Coder-V2 expanded the training data significantly by adding a further 6 trillion tokens, bringing the total to 10.2 trillion tokens. At the small scale, we train a baseline MoE model comprising 15.7B total parameters on 1.33T tokens. The total compute used for the DeepSeek V3 model's pretraining experiments would likely be 2-4 times the number reported in the paper. This makes the model faster and more efficient. Reinforcement learning: The model uses a more sophisticated reinforcement learning technique, Group Relative Policy Optimization (GRPO), which uses feedback from compilers and test cases, together with a learned reward model, to fine-tune the Coder. For example, if you have a piece of code with something missing in the middle, the model can predict what should be there based on the surrounding code. We have explored DeepSeek's approach to the development of advanced models. The larger model is more powerful, and its architecture is based on DeepSeek's MoE approach with 21 billion "active" parameters.
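To make the "group relative" part of GRPO concrete, here is a minimal sketch of the advantage calculation: several completions are sampled for the same prompt, each gets a reward, and every reward is normalized against the mean and standard deviation of its own group. The function name, the use of the population standard deviation, and the example rewards (fraction of test cases passed) are assumptions for illustration, not DeepSeek's training code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples drawn for the same prompt."""
    baseline = mean(rewards)   # the group average acts as the baseline
    spread = pstdev(rewards)   # population standard deviation of the group
    # eps avoids division by zero when every sample received the same reward
    return [(r - baseline) / (spread + eps) for r in rewards]


# Hypothetical rewards: fraction of test cases passed by four sampled completions.
rewards = [1.0, 0.5, 0.0, 0.5]
advantages = group_relative_advantages(rewards)
```

Completions that score above their group average get a positive advantage and the rest get zero or negative, so no separate value network is needed to provide the baseline.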

On 20 November 2024, DeepSeek-R1-Lite-Preview became accessible via DeepSeek's API, as well as via a chat interface after logging in. We've seen improvements in overall user satisfaction with Claude 3.5 Sonnet across these users, so in this month's Sourcegraph release we're making it the default model for chat and prompts. Model size and architecture: The DeepSeek-Coder-V2 model comes in two main sizes: a smaller model with 16B parameters and a larger one with 236B parameters. And that implication caused a massive selloff of Nvidia stock, leading to a 17% loss in the company's share price, a decrease of $600 billion in value for that one company in a single day (Monday, Jan 27). That's the largest single-day dollar-value loss for any company in the U.S. DeepSeek, one of the most sophisticated AI startups in China, has published details on the infrastructure it uses to train its models. DeepSeek-Coder-V2 uses the same pipeline as DeepSeekMath. In code editing, DeepSeek-Coder-V2 0724 scores 72.9%, which is the same as the latest GPT-4o and better than any other model except Claude-3.5-Sonnet, which scores 77.4%.
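The gap between 236B total parameters and 21 billion "active" ones comes from mixture-of-experts routing: each token is sent to only a few experts. The sketch below shows generic top-k gating plus the resulting active share. It is an illustration under assumptions (the function names, logits, and `k` are made up) and not DeepSeek's actual router.

```python
import math


def top_k_route(router_logits, k):
    """Return {expert_index: weight} for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Softmax over the chosen experts only, so their weights sum to 1.
    peak = max(router_logits[i] for i in chosen)
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def active_fraction(active_params, total_params):
    """Share of the model's parameters used for a single token."""
    return active_params / total_params


# Hypothetical router scores for four experts; only two are used per token.
weights = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
# Figures quoted in the text: 21B active out of 236B total parameters.
share = active_fraction(21e9, 236e9)
```

With those quoted figures, roughly 9% of the larger model's parameters take part in processing any one token.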

7b-2: This model takes the steps and schema definition, translating them into corresponding SQL code. 2. Initializing AI Models: It creates instances of two AI models: - @hf/thebloke/deepseek-coder-6.7b-base-awq: This model understands natural language instructions and generates the steps in human-readable format. Excels in both English and Chinese language tasks, code generation, and mathematical reasoning. The second model receives the generated steps and the schema definition, combining the information for SQL generation. Compared with DeepSeek 67B, DeepSeek-V2 achieves stronger performance, and meanwhile saves 42.5% of training costs, reduces the KV cache by 93.3%, and boosts the maximum generation throughput to 5.76 times. Training requires significant computational resources because of the vast dataset. No proprietary data or training tricks were used: the Mistral 7B Instruct model is a simple and preliminary demonstration that the base model can easily be fine-tuned to achieve good performance. Like o1, R1 is a "reasoning" model. In an interview earlier this year, Wenfeng characterized closed-source AI like OpenAI's as a "temporary" moat. Their initial attempt to beat the benchmarks led them to create models that were rather mundane, similar to many others.
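The two-model flow described above (one model writes human-readable steps, a second turns those steps plus the schema into SQL) can be sketched as a small pipeline. This is a minimal sketch, not the application's code: the prompt wording, `build_sql`, and the stub models are all hypothetical, and the real application would call hosted models instead of the stubs.

```python
from typing import Callable

# A "model" here is any function from prompt text to generated text.
Model = Callable[[str], str]


def build_sql(request: str, schema: str, step_model: Model, sql_model: Model) -> str:
    """Stage 1 produces readable steps; stage 2 converts steps + schema to SQL."""
    steps = step_model(f"Describe, step by step, how to do the following:\n{request}")
    sql_prompt = f"Schema:\n{schema}\n\nSteps:\n{steps}\n\nWrite the SQL."
    return sql_model(sql_prompt)


seen_prompts = []  # records what the second stage was given, for inspection


def stub_step_model(prompt: str) -> str:
    # Stand-in for the step-generating model.
    return "1. Insert one row into users with a random name."


def stub_sql_model(prompt: str) -> str:
    # Stand-in for the SQL-generating model.
    seen_prompts.append(prompt)
    return "INSERT INTO users (name) VALUES ('alice');"


sql = build_sql("Add a random user", "CREATE TABLE users (name TEXT);",
                stub_step_model, stub_sql_model)
```

Passing the models in as plain callables keeps the two stages independent, so either one can be swapped or tested without the other.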

What's behind DeepSeek-Coder-V2 that makes it special enough to beat GPT4-Turbo, Claude-3-Opus, Gemini-1.5-Pro, Llama-3-70B and Codestral in coding and math? The performance of DeepSeek-Coder-V2 on math and code benchmarks. It's trained on 60% source code, 10% math corpus, and 30% natural language. This is achieved by leveraging Cloudflare's AI models to understand and generate natural language instructions, which are then converted into SQL commands. The USV-based Embedded Obstacle Segmentation challenge aims to address this limitation by encouraging development of innovative solutions and optimization of established semantic segmentation architectures that are efficient on embedded hardware… This is a submission for the Cloudflare AI Challenge. Understanding Cloudflare Workers: I started by researching how to use Cloudflare Workers and Hono for serverless functions. I built a serverless application using Cloudflare Workers and Hono, a lightweight web framework for Cloudflare Workers. Building this application involved several steps, from understanding the requirements to implementing the solution. The application is designed to generate steps for inserting random data into a PostgreSQL database and then convert those steps into SQL queries. Italy's data protection agency has blocked the Chinese AI chatbot DeepSeek after its developers did not disclose how it collects user data or whether it is stored on Chinese servers.
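As a quick worked example of the 60/10/30 training mix quoted above, the sketch below splits a token budget by those percentages. Applying the mix to the 6 trillion additional tokens is an assumption made here for illustration, since the text does not say which total the percentages refer to. The function name is hypothetical.

```python
def split_tokens(total_billion: int, mix_percent: dict[str, int]) -> dict[str, int]:
    """Split a token budget (in billions) according to integer percentages."""
    if sum(mix_percent.values()) != 100:
        raise ValueError("mix percentages must sum to 100")
    # Integer arithmetic avoids floating-point rounding in the shares.
    return {name: total_billion * pct // 100 for name, pct in mix_percent.items()}


# Percentages quoted in the text.
MIX = {"source_code": 60, "math": 10, "natural_language": 30}

# Assumption: the mix is applied to the 6 trillion (6,000 billion) added tokens.
budget = split_tokens(6_000, MIX)
```

Under that assumption the split works out to 3,600 billion tokens of source code, 600 billion of math, and 1,800 billion of natural language.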
